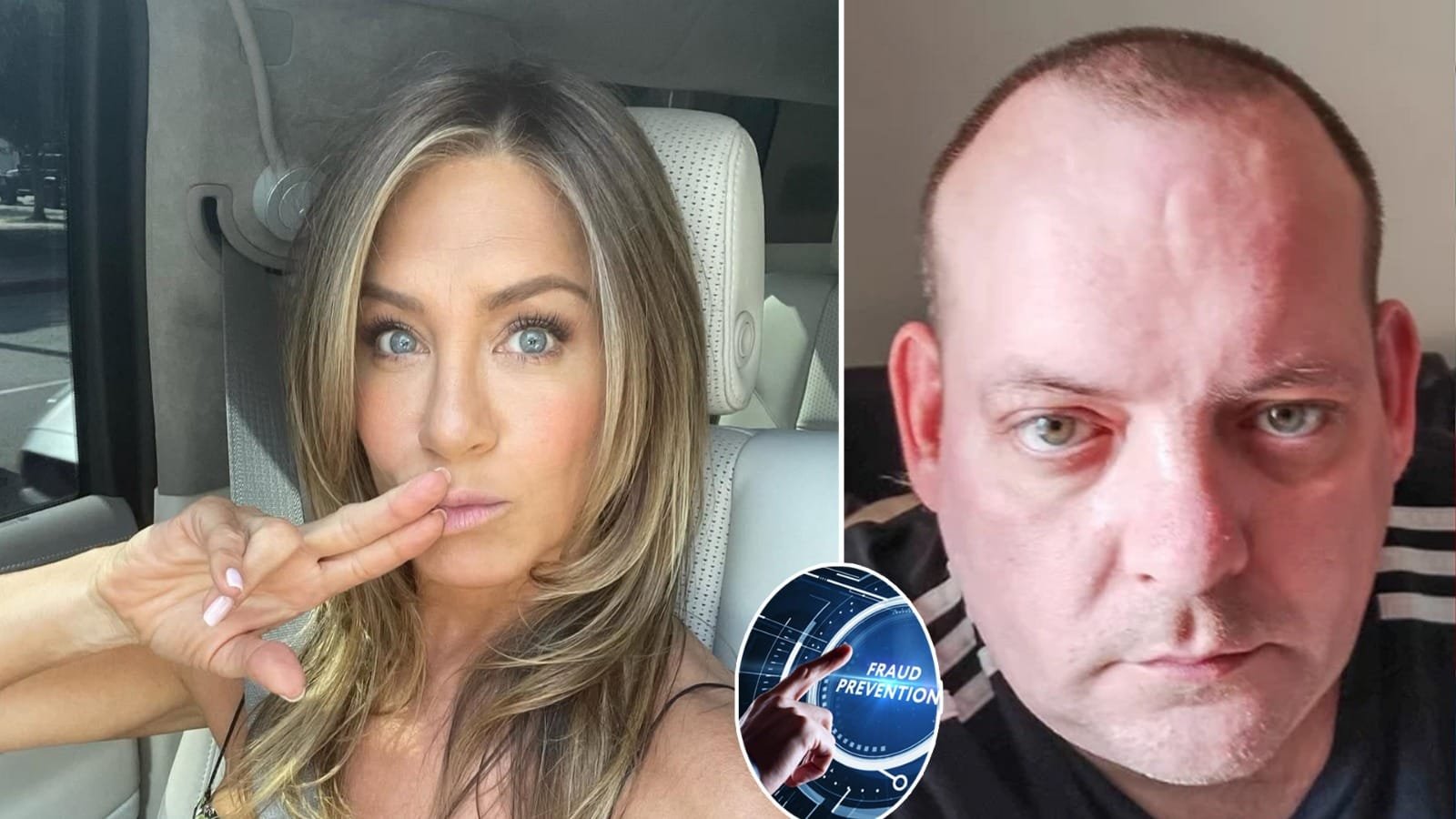

In a shocking demonstration of how technology is enabling modern fraud, a man based in the UK was fooled into sending hundreds of pounds to criminals impersonating actress Jennifer Aniston. The intricate scam, complete with AI-generated selfies, audio recordings and even a fake ID, unfolded over a period of months, revealing a murkier, more emotionally exploitative side of the AI boom.

The man at the center of the scam, Paul Davis, was first contacted on Facebook by someone claiming to be the Friends star. The messages quickly turned romantic: “my love”, flirty emojis, and sweet nothings in the middle of the day. What made it seem authentic? The con artist sent what appeared to be selfies of Aniston, a copy of her driver’s license and audio recordings mimicking her voice.

“I believed it—and I paid. They told me she loved me.”

Once an emotional attachment had been established, Paul was asked to send Apple gift cards, allegedly to help the actress renew her subscriptions. Believing he was in a private relationship with one of Hollywood’s best-known stars, Paul complied. The heartbreaking part is that he discovered the truth only much later.

Celebrity scams are not new, but the technology is. Paul told me that this was not an isolated case. Over the past five months, he said, he had received messages and videos impersonating other high-profile people, including Elon Musk and Mark Zuckerberg.

In one clip, Zuckerberg appears to flash an ID and insist, “This is not a scam.”

“They were so real, anyone could fall for it,” Paul said. “Someone I know lost over a thousand pounds in gift cards the same way.”

Another message promised Paul a Range Rover and a cash prize. The timbre and cadence of the voices, the mannerisms, and the facial features were all astonishingly realistic.

These scams exploit a potent combination of emotional manipulation and contemporary technology. Here’s why they seem so plausible:

AI-generated images and videos that mirror the facial nuances of celebrities

Synthetic voice recordings cloned from publicly available interviews

Fake IDs and documents produced with graphics software

Psychological ploys, often built on personal information harvested from social media

And it is not just celebrities. Some scams go even further, impersonating a victim’s friends or relatives in contrived emergencies to extract money under duress.

Tragic as it is, Paul’s account is not uncommon. In one particularly well-publicized case, a French woman was allegedly swindled out of £700,000 by a fraudster impersonating actor Brad Pitt, who claimed he needed the money for cancer treatment.

These stories are often dismissed as the result of gullibility or loneliness, but researchers point out that when emotion and realism are weaponized together, anybody can fall prey, regardless of education or age.

Warning Signs:

If you get an unexpected message, whether it appears to come from a public figure or from a more personal contact, here are some red flags to look for:

Requests for Apple gift cards, cryptocurrency, or other untraceable money transfers

Messages from accounts that look official but aren’t verified

Romantic language or urgent requests that escalate quickly

Signs of AI-generated media, such as pictures, videos, or voice notes

Offers that are too good to be true: prizes, secret relationships, investments

Experts recommend verifying identities through a trusted offline channel, such as a phone call or an in-person meeting. The best rule is to do your research before engaging or sending money.

Despite numerous cases, many social media companies still have not implemented an effective way to flag or block deepfake-driven scam messages. Tools to help detect synthetic media are still in development, and moderation policies cannot keep pace with fraudsters, who invent new tactics faster than platforms can respond.

Regulators in the UK and other countries have recommended that companies clearly label AI-generated content and increase protections for users, but enforcement and monitoring seem haphazard at best.